Caching

Caching consists of temporarily storing data in a faster component to speed up access to information. Whenever requested data is already present in the cache, it can be returned with very low latency.

In situations of high demand, with a large volume of requests, caching brings substantial performance gains. The API Manager supports caching for any GET operation of an API.

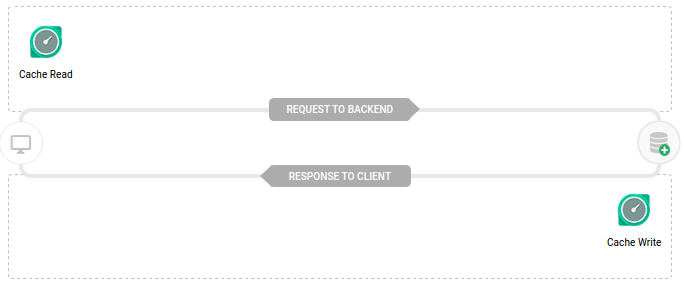

Cache Write and Cache Read Interceptors

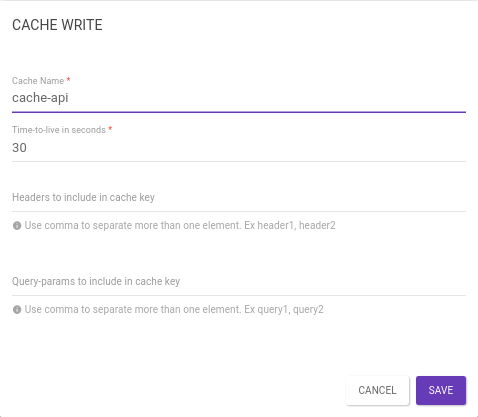

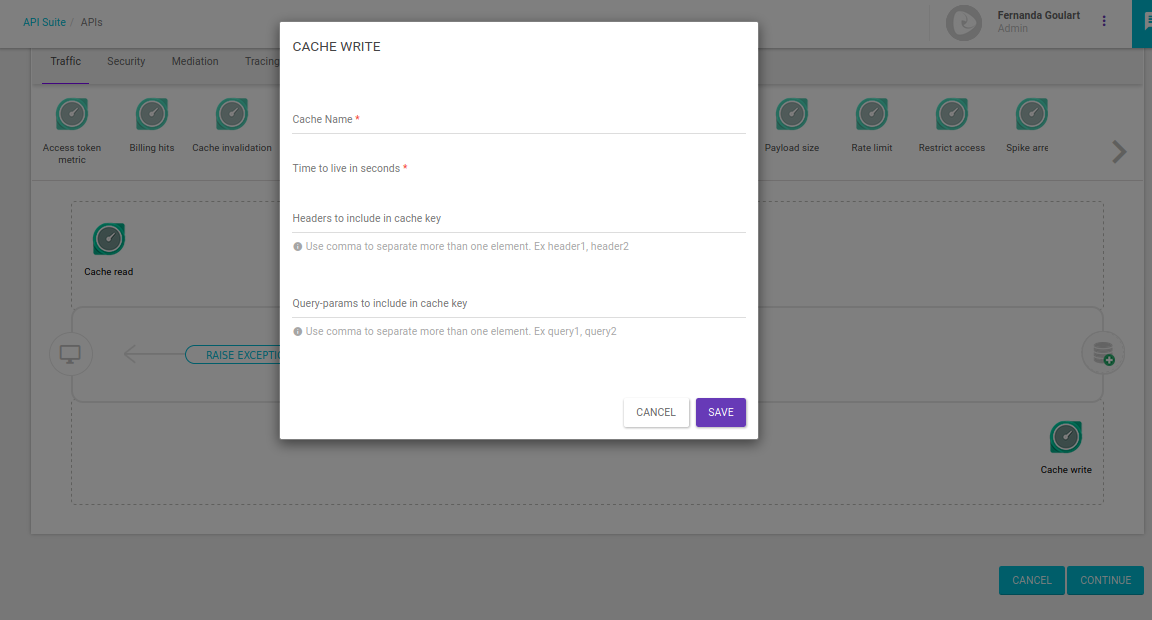

The Cache Write interceptor, when inserted into the response flow of a particular GET operation, creates a cache based on the configured settings. From the second request onward, the system uses the response stored in memory. To enable this, add a Cache Read interceptor to the request flow.

When configuring the cache, you can specify header and/or URL attributes that determine which cache entry is used, as well as the expiration time of the cached data (time-to-live).

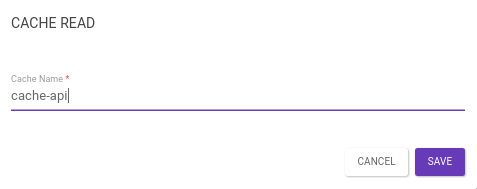

The Cache Read interceptor is responsible for identifying an existing cache by the Cache Name provided. When a request is sent to a cached operation, the interceptor identifies the cache and returns the in-memory response for the call. This improves performance, since the request doesn’t have to be sent to the backend.

| The cache name must be the same as in the Cache Write interceptor. |

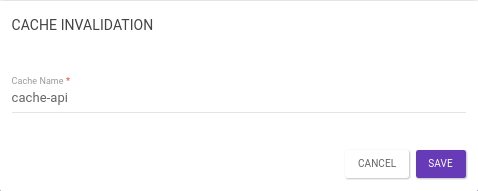
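The interplay between the two interceptors can be sketched as follows. This is only an illustration, not the API Manager's implementation: the `caches` dictionary and the functions `cache_read`, `cache_write` and `handle_get` are hypothetical names, and the cache is assumed to be keyed by URL only.

```python
from typing import Callable, Dict, Optional

# Hypothetical in-memory store: cache name -> {request URL -> response body}.
caches: Dict[str, Dict[str, str]] = {}


def cache_read(cache_name: str, url: str) -> Optional[str]:
    """Request flow: return the stored response, or None on a cache miss."""
    return caches.get(cache_name, {}).get(url)


def cache_write(cache_name: str, url: str, response: str) -> None:
    """Response flow: store the backend response under the given cache name."""
    caches.setdefault(cache_name, {})[url] = response


def handle_get(cache_name: str, url: str, backend: Callable[[str], str]) -> str:
    """Simulate a GET operation that has both interceptors in its flow."""
    cached = cache_read(cache_name, url)
    if cached is not None:
        return cached  # served from memory, the backend is not called
    response = backend(url)  # cache miss: the request reaches the backend
    cache_write(cache_name, url, response)
    return response
```

Both functions receive the same `cache_name`, which mirrors the requirement that the Cache Read and Cache Write interceptors share one cache name.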

Cache Invalidation Interceptor

The Cache Invalidation interceptor is responsible for invalidating a given cache, identified by the Cache Name provided. When the interceptor is inserted into a particular operation, calls to that operation cause the system to find and delete the cache.
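As a rough sketch of this behavior, assume caches are held in a dictionary indexed by cache name. The `caches` structure, its sample contents and the `cache_invalidate` function are hypothetical and only illustrate deletion by name.

```python
from typing import Dict

# Hypothetical store: cache name -> {request URL -> response body}.
caches: Dict[str, Dict[str, str]] = {
    "products-cache": {"/products": "[]"},
    "cities-cache": {"/cities": "[]"},
}


def cache_invalidate(cache_name: str) -> bool:
    """Delete the whole cache with this name; return True if it existed."""
    return caches.pop(cache_name, None) is not None
```

Only the named cache is removed; caches with other names are left untouched.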

Cache Key

Cached responses don’t always have to be the same; they can vary depending on pre-defined elements. The cache key identifies the different responses stored. All elements of the request (URL, headers, query params, etc.) that influence the response must be part of the key. Elements that don’t influence the response can be left out.

| The URL is always part of the key. |

- If the response to a call can be globally reused (a list of cities, for example), then the URL is enough as a key. This means that after the first access to, e.g., GET /cities, all other accesses, even by other apps or users, will receive the same response from the cache.
- If the URL is the same, but the response is different depending on the user or app used (for example, GET /preferences returns different data depending on the user involved in the call), then that user/app needs to be part of the key.
- Other information that influences the response, such as query params used for filtering (e.g., GET /products?orderBy=date vs. GET /products?orderBy=price), also needs to be part of the key.
- Paging parameters are also candidates to be part of the key (e.g., GET /products?page=1 vs. GET /products?page=2).

To define how the cache key is formed, the administrator must fill out two fields after selecting the Cache Write interceptor in the response flow:

- The Headers to include in cache key field should be a comma-separated list of HTTP headers that should be used to compose the key. E.g.: the value client_id, access_token causes the client_id and access_token headers to be part of the key.
- The Query-params to include in cache key field works like the field above, but specifies query params to be added. In the examples above, the cache key would take into account the query params orderBy, page.
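The key composition described above could look roughly like the sketch below. The function `build_cache_key` and its parameters are hypothetical, and the sketch assumes that "the URL" in the key means the path without the query string, with the configured headers and query params added on top. The Gateway's internal key format is not documented here.

```python
from typing import Iterable, Mapping, Tuple
from urllib.parse import parse_qs, urlsplit


def build_cache_key(
    url: str,
    headers: Mapping[str, str],
    key_headers: Iterable[str],
    key_params: Iterable[str],
) -> Tuple:
    """Combine the path with the configured headers and query params."""
    parts = urlsplit(url)
    query = parse_qs(parts.query)
    # HTTP header names are case-insensitive, so compare them in lower case.
    lowered = {name.lower(): value for name, value in headers.items()}
    header_part = tuple(
        (name.lower(), lowered.get(name.lower()))
        for name in sorted(key_headers, key=str.lower)
    )
    # Only the configured query params take part in the key.
    param_part = tuple(
        (name, tuple(query.get(name, []))) for name in sorted(key_params)
    )
    return (parts.path, header_part, param_part)
```

With `key_headers=["client_id"]` and `key_params=["orderBy", "page"]`, two requests to /products that differ only in `orderBy` produce different keys, while a query param that is not listed does not change the key.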

Sub-Resources

When looking up cached responses, the Gateway only considers URLs that exactly match the requested one.

This means that a rule that configures caching for the resource /products does not enable caching for the resource /products/{id}; for the API, these are two completely separate resources.

Please note, however, that if a caching rule is created for the resource /products/{id}, then the Gateway will cache different responses to /products/123 and /products/456, as expected.

This means that popular products will often be served from the cache, while less viewed products will not be in the cache and will be routed directly to the backend.

The rules above about headers and query params that are part of the cache key also apply to sub-resources such as /products/{id}.
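The distinction between a resource and its sub-resources can be illustrated with a small matcher. This is only a sketch: `rule_applies` is a hypothetical helper, and it assumes that a placeholder such as {id} stands for exactly one path segment. How the Gateway matches resources internally is not described here.

```python
import re


def rule_applies(resource_template: str, path: str) -> bool:
    """Check whether a caching rule on a resource covers a requested path."""
    # Split the template into literal text and {placeholder} pieces.
    pieces = re.split(r"(\{[^/{}]+\})", resource_template)
    pattern = "".join(
        "[^/]+" if piece.startswith("{") else re.escape(piece)
        for piece in pieces
    )
    # fullmatch ensures the whole path matches, not just a prefix.
    return re.fullmatch(pattern, path) is not None
```

Under these assumptions, a rule on /products covers only /products, while a rule on /products/{id} covers /products/123 and /products/456, each of which is then cached under its own URL.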

Time-To-Live

Each cache rule specifies a length of time during which the cache is considered valid. After this time, the responses in this cache are discarded. Please note that the values are in seconds; typically, API response caches last from a few seconds to a few hours, and no longer.
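A minimal sketch of time-to-live handling follows. The `TtlCache` class is hypothetical and assumes that expiry is tracked per stored response. The clock is injectable so the behavior can be checked without waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlCache:
    """Stores responses that are discarded after ttl_seconds."""

    def __init__(
        self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        # key -> (expiry timestamp, response body)
        self._entries: Dict[str, Tuple[float, str]] = {}

    def put(self, key: str, response: str) -> None:
        self._entries[key] = (self.clock() + self.ttl_seconds, response)

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, response = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired: discard and report a miss
            return None
        return response
```

With `TtlCache(ttl_seconds=60)`, a response stored at second 0 is still returned at second 59 and is treated as a miss from second 60 onward, at which point the next request would go to the backend again.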

Maximum size

Internally, the gateway has a practical limit on the number of bytes it can store in memory. The purpose of this limit is to protect the gateway’s own infrastructure, so that overly large responses do not occupy memory for too long. In general, this limit is large enough to accommodate many reasonably sized responses; but if the threshold is reached, new responses will only be stored after some existing ones are removed.
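The effect of the size limit can be sketched with a byte-bounded store. The `BoundedStore` class and the 10-byte limit used in the usage note are hypothetical. The actual limit and the order in which the gateway removes entries are not specified here, so this sketch simply refuses new responses until space is freed.

```python
from typing import Dict


class BoundedStore:
    """Keeps responses in memory up to a fixed number of bytes."""

    def __init__(self, max_bytes: int) -> None:
        self.max_bytes = max_bytes
        self.used_bytes = 0
        self._entries: Dict[str, bytes] = {}

    def put(self, key: str, body: bytes) -> bool:
        """Store the response if it fits; return False when the limit is hit."""
        previous = len(self._entries.get(key, b""))
        if self.used_bytes - previous + len(body) > self.max_bytes:
            return False  # threshold reached: not stored
        self._entries[key] = body
        self.used_bytes += len(body) - previous
        return True

    def remove(self, key: str) -> None:
        """Free the space used by one stored response, if present."""
        body = self._entries.pop(key, None)
        if body is not None:
            self.used_bytes -= len(body)
```

For instance, with `BoundedStore(max_bytes=10)`, a 5-byte response is stored, a following 6-byte response is rejected, and it is accepted only after the first one has been removed.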

Cache Update

The cache is updated when its time-to-live expires and a request is made for an operation that has the Cache Write and Cache Read interceptors in its flow. To disable a cache, insert a Cache Invalidation interceptor with the same cache name into an operation.
