Rate Limit AI Tokens

The Rate Limit AI Tokens interceptor can be used in proxies of APIs that consume Large Language Models (LLMs) to control how many tokens artificial intelligence applications consume. This helps you manage the costs of these applications, prevent overuse, and maintain service quality by avoiding overloads in artificial intelligence systems.

Below you will learn how to include the Rate Limit AI Tokens interceptor in the flow of your APIs and how to configure it.

Operation and configuration

The Rate Limit AI Tokens interceptor allows you to define the maximum number of tokens allowed within a period of time. When this limit is exceeded, the call is denied and a response with HTTP status code 429 is returned to the client. You can also set an additional percentage of tokens to be accepted beyond the specified limit, and choose to send the number of remaining tokens in a response header.
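The behavior described above can be pictured with a minimal Python sketch. It is not the interceptor's actual implementation: the class name `TokenWindowLimiter`, the fixed-window reset strategy, and the choice to report the remaining tokens against the soft-limit-extended budget are all assumptions made for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class TokenWindowLimiter:
    """Hypothetical fixed-window token budget, for illustration only."""

    max_tokens: int           # value of the Tokens field
    interval_seconds: float   # length of the Interval
    soft_limit_pct: int = 0   # Soft Limit percentage; 0 means disabled
    _window_start: Optional[float] = field(default=None, init=False)
    _used: int = field(default=0, init=False)

    @property
    def effective_limit(self) -> int:
        # The soft limit extends the base limit by the given percentage.
        return self.max_tokens + self.max_tokens * self.soft_limit_pct // 100

    def check(self, tokens: int, now: Optional[float] = None) -> Tuple[int, int]:
        """Return (http_status, remaining_tokens) for a call costing `tokens`."""
        now = time.monotonic() if now is None else now
        # Assumption: the budget resets once a full interval has elapsed.
        if self._window_start is None or now - self._window_start >= self.interval_seconds:
            self._window_start = now
            self._used = 0
        if self._used + tokens > self.effective_limit:
            # Over the limit: deny the call with HTTP 429.
            return 429, self.effective_limit - self._used
        self._used += tokens
        return 200, self.effective_limit - self._used


limiter = TokenWindowLimiter(max_tokens=1000, interval_seconds=60, soft_limit_pct=10)
status, remaining = limiter.check(900, now=0.0)
```

With a limit of 1000 tokens and a 10% soft limit, the sketch accepts up to 1100 tokens per interval and denies any call that would exceed that total.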

| The token count performed by the Rate Limit AI Tokens interceptor is only an estimate and works similarly to the OpenAI Tokenizer tool. Therefore, if your API forwards the request body directly to the LLM, the value calculated by the interceptor may not match the one computed by the OpenAI API. This is because the OpenAI API, in addition to the string with the message content (prompt), also takes other elements of the request body into account when calculating the tokens consumed. OpenAI itself warns in this article about the difficulty of estimating the number of tokens. |

The interceptor can be included in the request flows (REQUEST TO BACKEND) of an API revision or a plan, for all resources and operations, as well as for specific resources and operations.

| See more about API flows. |

To insert the Rate Limit AI Tokens interceptor into the flow, click on its icon, located in the AI category on the Edit Flow screen, and drag it to the request flow (REQUEST TO BACKEND).

A modal window will open for you to provide the following information:

- Location: select where in the request the content to be counted is sent (Cookie, Header, Query Param or JSON Body).
- Location Name: enter the name of the field selected in Location. For JSON Body, you can enter the exact name of a JSON field or use a JSONPath expression, which lets you access fields or arrays dynamically in complex JSON structures. See the usage examples for this field.
- Provider: select the provider of the LLM used (at the moment, only models provided by OpenAI are accepted).
- Model: select the LLM used.
- Tokens: enter the maximum number of tokens accepted within the specified interval.
- Interval: select the period within which the specified token limit applies.
- Soft Limit: allows you to set an additional percentage of tokens to be accepted. To do this, check the box and enter the desired value in the % field on the right.

| If the Soft Limit option is checked, the % field becomes mandatory. If it is not checked, the interceptor operates normally, based only on the limit set in the Tokens field. |

-

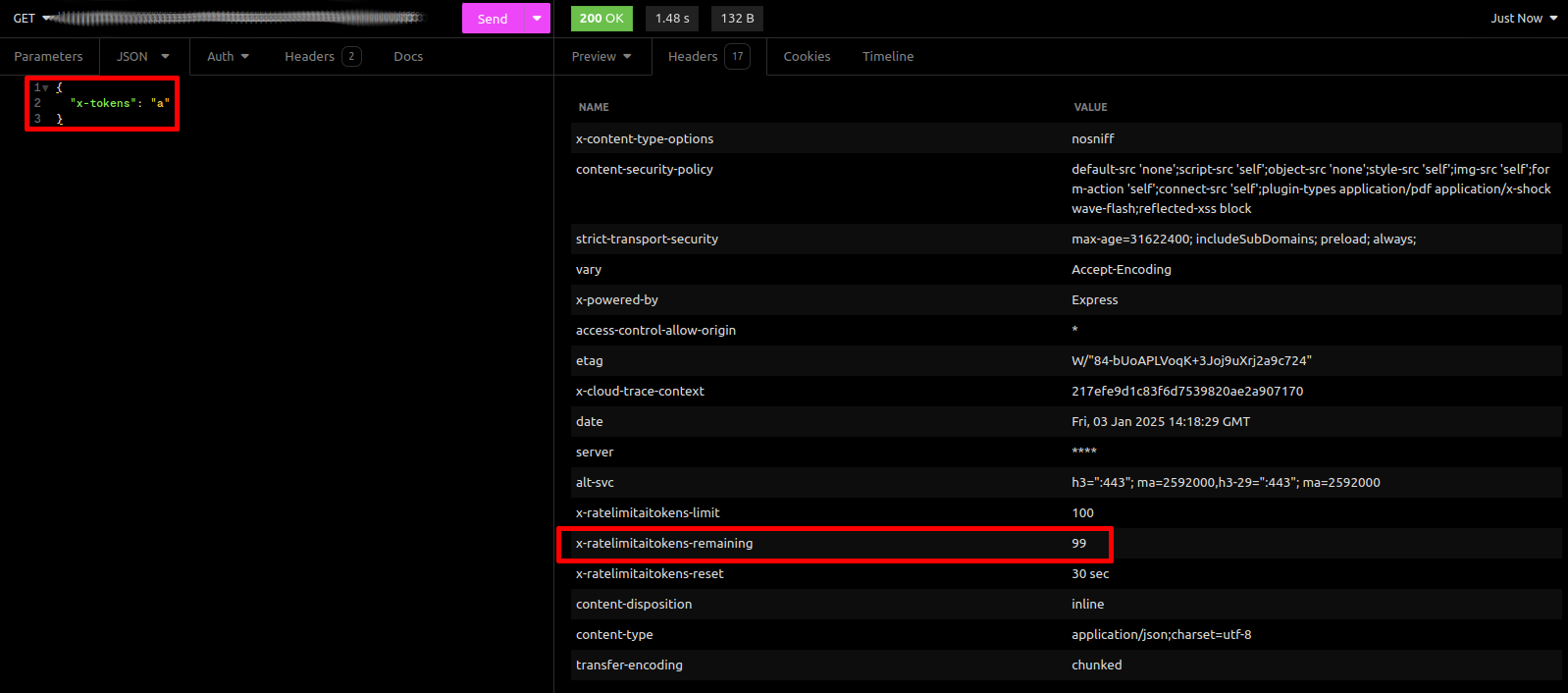

Return quota header?: if checked, a parameter with the remaining amount of tokens will be sent with the header of the response, as in the image below:

Usage examples for the Location Name field

| Any data to be processed by the interceptor, whether code, JSON or structured text, must be converted to a string before being sent. If the value is not a string, the interceptor is bypassed and no tokens are counted.

Why does the bypass occur? The interceptor can only calculate tokens for values of type string. Fields that are arrays, objects, null values or other types cannot be processed. To avoid request failures in these cases, the Rate Limit AI Tokens configuration is simply ignored.

How to avoid the bypass? Make sure that the evaluated field is always a string. For objects or arrays, serialize them or extract the necessary values with tools such as JSONPath (see below), ensuring that the result is a string.

Tokens are not counted directly on objects or arrays. All content must be serialized as strings, since the OpenAI API itself only accepts strings.

If you want to count the tokens of a specific field within a JSON, you must clearly indicate the desired field (using the literal name or a JSONPath expression, as shown below). It is essential that the extracted value be a string. |
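One way a client could follow this recommendation is to serialize structured values before placing them in the evaluated field. The sketch below uses Python's standard `json` module. The helper name `as_countable_string` and the `content` field in the payload are hypothetical and chosen only for illustration.

```python
import json
from typing import Any


def as_countable_string(value: Any) -> str:
    """Return a string version of `value` so the field is never an object or array."""
    if isinstance(value, str):
        return value
    # ensure_ascii=False keeps accented characters such as "é" unescaped.
    return json.dumps(value, ensure_ascii=False)


items = [{"id": 1, "value": "primeiro"}, {"id": 2, "value": None}, {"id": 3}]

# Hypothetical request body: the evaluated field ("content") carries a string.
payload = {"model": "gpt-4o", "content": as_countable_string(items)}
```

Because `content` is now a string, a Location Name of `content` or `$.content` would point at a value the interceptor can evaluate instead of triggering the bypass.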

When you select JSON Body in the Location field of the interceptor configuration form, there are two possibilities for the Location Name field:

- Exact field name: enter the exact name of the desired field in the JSON.
- JSONPath expression: enter an expression beginning with `$.` to access data in the JSON dynamically.

If the entered value starts with `$.`, it is interpreted as a JSONPath expression. Otherwise, it is treated as the exact name of a JSON field at the root level.
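The dispatch rule above can be sketched in Python. This is only an illustration, not the interceptor's code: the function `resolve_location_name` is hypothetical, and it understands just a small subset of JSONPath (`.name`, `[index]` and `[*]`), leaving out filters and other features. Raising `LookupError` when nothing matches is an assumption that mirrors the "field not found" error shown in Example 4.

```python
import re
from typing import Any, List

# One path step: ".name", "[3]" or "[*]". Filters such as [?(...)] are not handled.
_STEP = re.compile(r"\.([A-Za-z_]\w*)|\[(\d+)\]|\[(\*)\]")


def resolve_location_name(body: dict, location_name: str) -> List[Any]:
    """Return the values selected by a literal name or a simplified JSONPath."""
    if not location_name.startswith("$."):
        # Literal name: looked up at the root level only.
        if location_name not in body:
            raise LookupError(f"field not found: {location_name}")
        return [body[location_name]]

    matches: List[Any] = [body]
    pos = 1  # skip the leading "$"
    while pos < len(location_name):
        step = _STEP.match(location_name, pos)
        if step is None:
            raise ValueError(f"unsupported expression: {location_name}")
        name, index, wildcard = step.groups()
        selected: List[Any] = []
        for node in matches:
            if name is not None and isinstance(node, dict) and name in node:
                selected.append(node[name])
            elif index is not None and isinstance(node, list) and int(index) < len(node):
                selected.append(node[int(index)])
            elif wildcard is not None and isinstance(node, list):
                selected.extend(node)
        matches = selected
        pos = step.end()
    if not matches:
        raise LookupError(f"field not found: {location_name}")
    return matches


body = {"model": "gpt-4o", "content": "Qual é o clima hoje?"}
literal = resolve_location_name(body, "content")
by_path = resolve_location_name(body, "$.content")
```

In this sketch, the literal name and the JSONPath expression select the same value, as in Example 1 below.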

Here are some examples:

Example 1: Simple JSON

    {
      "model": "gpt-4o",
      "version": "v1.0",
      "content": "Qual é o clima hoje?"
    }

- Literal field: `content` → Result: `Qual é o clima hoje?` (total: 6 tokens, 20 characters)
- JSONPath: `$.content` → Result: `Qual é o clima hoje?` (total: 6 tokens, 20 characters)

Example 2: JSON with arrays

Input JSON:

    {
      "model": "gpt-4o",
      "messages": [
        {
          "role": "system",
          "content": "Responda sempre com ironia"
        },
        {
          "role": "user",
          "content": "Aqui está um JSON, você pode me ajudar a entender os itens?\n\n{\"items\": [{\"id\": 1, \"value\": \"primeiro\"}, {\"id\": 2, \"value\": null}, {\"id\": 3}]}"
        }
      ]
    }

- Literal field: `messages` → Result: the count is ignored because `messages` is an array, not a string.
- Literal field: `model` → Result: `gpt-4o` (total: 5 tokens, 6 characters)
- JSONPath: `$.messages[*]` → Result: the count is ignored because the expression returns the entire array of objects, and the result must be a string.
- JSONPath: `$.messages[0].content` → Result: `Responda sempre com ironia` (total: 7 tokens, 26 characters)
- JSONPath: `$.messages[1].content` → Result: `Aqui está um JSON, você pode me ajudar a entender os itens?\n\n{\"items\": [{\"id\": 1, \"value\": \"primeiro\"}, {\"id\": 2, \"value\": null}, {\"id\": 3}]}` (total: 54 tokens, 157 characters)
- JSONPath: `$.messages[*].content` → Result: `Responda sempre com ironia` + `Aqui está um JSON, você pode me ajudar a entender os itens?\n\n{\"items\": [{\"id\": 1, \"value\": \"primeiro\"}, {\"id\": 2, \"value\": null}, {\"id\": 3}]}` (total: 61 tokens, 183 characters)
- JSONPath with conditional: `$.messages[?(@.role=="user")].content`
  - `messages`: the name of the array in the JSON.
  - `[?(@.role=="user")]`: a conditional filter that checks whether the `role` field has the value `"user"`.
    - `@`: represents the current item in the array.
    - `role=="user"`: the condition being checked.
  - `.content`: the field to extract from the object that met the condition.

  Result (total: 54 tokens, 157 characters):

  `Aqui está um JSON, você pode me ajudar a entender os itens?\n\n{\"items\": [{\"id\": 1, \"value\": \"primeiro\"}, {\"id\": 2, \"value\": null}, {\"id\": 3}]}`
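For readers less familiar with JSONPath filters, the conditional expression can be read as a loop over the `messages` array that keeps the `content` of every item whose `role` matches. The Python sketch below is a rough equivalent written for illustration. The function name `contents_for_role` is hypothetical and the message text is shortened.

```python
from typing import List


def contents_for_role(body: dict, role: str) -> List[str]:
    """Rough equivalent of $.messages[?(@.role=="<role>")].content."""
    return [
        message["content"]                       # .content
        for message in body.get("messages", [])  # each item plays the part of "@"
        if message.get("role") == role and "content" in message  # @.role=="<role>"
    ]


body = {
    "model": "gpt-4o",
    "messages": [
        {"role": "system", "content": "Responda sempre com ironia"},
        {"role": "user", "content": "Qual é o clima hoje?"},
    ],
}
user_contents = contents_for_role(body, "user")
```

Only the message with the `user` role is selected, so the system prompt does not take part in the count.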

Example 3: Nested JSON

    {
      "user": {
        "id": 123,
        "profile": {
          "name": "João",
          "preferences": {
            "notifications": true
          }
        }
      }
    }

- Literal field: `user` → Result: the count is ignored because `user` is an object, not a string.
- JSONPath: `$.user.profile.name` → Result: `João` (total: 2 tokens, 4 characters)
- JSONPath: `$.user.profile.preferences.notifications` → Result: `true` (total: 1 token, 4 characters)

Example 4: JSON with null and missing values

    {
      "items": [
        {"id": 1, "value": "primeiro"},
        {"id": 2, "value": null},
        {"id": 3}
      ]
    }

- Literal field: `items` → Result: the count is ignored because `items` is not a string.
- JSONPath: `$.items[*].value` → Result: `["primeiro", null]` (total: 2 tokens, 8 characters)
- JSONPath: `$.items[?(@.id==2)].value` → Result: `[null]` (total: 0 tokens, 0 characters)
- JSONPath: `$.items[3].value` → Result: an exception corresponding to HTTP status code `400` (Bad Request) is raised, stating that the field was not found.
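The character totals in this example suggest that, when an expression matches several values, the string matches are combined and null entries contribute nothing. The Python sketch below shows one reading of that behavior. It is an assumption drawn from the examples rather than a description of the interceptor's internals, the function name `text_to_count` is hypothetical, and it covers only strings, nulls, objects and arrays.

```python
from typing import Any, List, Optional


def text_to_count(matches: List[Any]) -> Optional[str]:
    """Join the string matches; return None to signal a bypass."""
    if any(isinstance(match, (dict, list)) for match in matches):
        # Objects and arrays cannot be counted, so the count is skipped.
        return None
    # Assumption: null matches are ignored and the strings are concatenated.
    return "".join(match for match in matches if isinstance(match, str))


values = ["primeiro", None]   # matches of $.items[*].value
only_null = [None]            # matches of $.items[?(@.id==2)].value

combined = text_to_count(values)
empty = text_to_count(only_null)
```

Under this reading, `combined` has 8 characters and `empty` has none, which agrees with the character totals listed above.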
